Hate speech detectors are easily tricked. A test of systems designed to identify offensive speech online shows that a few innocuous words or spelling errors can easily trip them up. The results cast doubt on the use of technology to tame online discourse.

N. Asokan at Aalto University in Finland and colleagues investigated seven different systems used to identify offensive text. These included a tool built to detoxify bitter arguments in Wikipedia’s edits section, Perspective – a tool created by Google’s Counter Abuse team and Jigsaw, …

https://www.newscientist.com/article/2178965-googles-ai-hate-speech-detector-is-easily-fooled-by-a-few-typos/


Communalism all day everyday (hk 2015)

extract from a photo on street

Seeds of Hate - Picture of Bricks collected by the Hindu right Ram Janmabhoomi movement (Photo PTI)

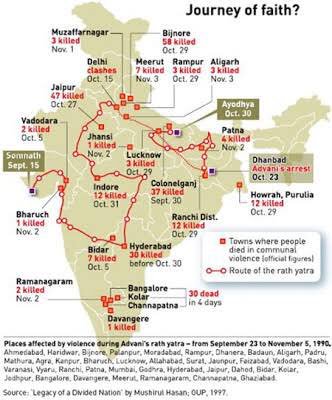

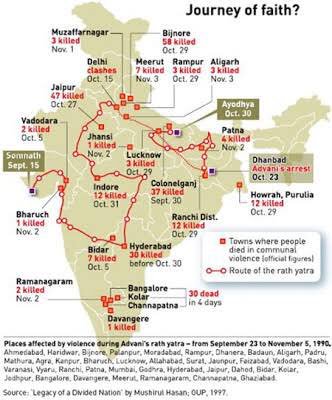

Journey of Faith? - Places affected by violence during BJP's Rath Yatra

Resources for all concerned with the culture of authoritarianism in society, the banalisation of communalism (also chauvinism, parochialism and identity politics) and the rise of the far right in India (with occasional information on other countries of South Asia and beyond)


Links

- Fast Track To Troubling Times: 100 Days of Narendra Modi – A Counter Report

- Hindu Nationalism in the United States: A Report on Nonprofit Groups

- Gujarat Carnage 2001-2010

- Communalism Combat

- Anhad

- Sahmat News

- Plural India

- Citizens For Justice and Peace

- Coalition Against Communalism

- For Defence of Teesta Setalvad

- Secular Democracy

- Citizens For Peace

- South Asia Citizens Web

- Truth of Gujarat

- Desh Kosh

- Feku

- Vision Jafri

- Narendra Modi Facts

- Orissa Burning: The anti christian pogrom of Aug-Sep 2008

- Remembering 1992

- Thus Spake Srikrishna

- Janta Ka Aina

- Onlinevolunteers org

- Campaign to Stop Funding Hate

- Awaaz South Asia

- Coalition Against Genocide

- Sangh Samachar

- Stop The Hatred

- Barbarta Kay Virudh: An anti fascist blog in Hindi

- The Pink Chaddi Campaign

- Ban Vishwa Hindu Parishad

- Report of Indian People's Tribunal on Communalism in Orissa

- The Truth on Gujarat 2002 - A Tehelka investigation

- Communalism and Religious Fundamentalism in India: A Resource File [A PDF file]

- Secularism is a women's issue

- Centre for Secular Space

- Human Rights for All

- Gulail

- Holy Cow & Other Bull

- Searchlight Magazine

- Anti Fascist Network

- Ras l'front


Communalism all day everyday

See Therapist for "Hurt Sentiments"

Freedom from Hindutva

Map of L K Advani's Rath Yatra of 1990

About Us / Disclaimer

This is a collaborative space run by an informal collective of people from across India and elsewhere. The blog was started many years ago under the aegis of South Asia Citizens Web. All web content is placed here in the public interest; it may be freely used for non-commercial purposes. Please remember to give credit to original copyrighted sources and seek permission for further use.

Disclaimer: Posting of content here does not constitute endorsement by the Communalism Watch Cooperative.
